Genre

Introduction

Genre may be very simple or very complex. Literary studies recognize a number of stable categories that, by and large, make sense: fiction/non-fiction and prose/lyric/drama remain essential. Then, there are also numerous categories that may not be precise, but still cover a lot of ground, such as the romance or the detective novel.

With digital corpora, it is possible to sort texts according to genre and, for example, study the representation of certain topics. Sorting by metadata is not always satisfying, however, because a work can belong to more than one genre. Beyond such sorting, computational methods may be used in two primary ways to study genre:

1) to examine whether the categories actually hold up and whether the texts we assume belong to a genre are as distinct from other texts as we thought;

2) to develop a new idea of what genre is and find other patterns in literary history than those which have become generally recognized.

These are not easy tasks that can be accomplished out of the box, but this is a field in which a number of researchers have produced new and highly interesting results.

Applications

Elementary

There are several tools readily and freely available online, such as AntConc and Voyant Tools, that enable their users to perform simple yet illuminating analyses and comparisons, e.g. calculating average sentence length, most used words, average word length and type-token ratio. One could, for example, consider whether certain words are more likely to occur in Shakespeare’s sonnets than in his plays, or compare whether his sentences are on average shorter or longer in his tragedies than in his comedies.
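The measures mentioned above are simple enough to compute by hand. The following is a minimal sketch, not a substitute for AntConc or Voyant Tools: the function name `basic_stats`, the naive sentence splitter and the tokenisation rule are all assumptions made for illustration, and verse or abbreviations would need a more careful splitter.

```python
import re

def basic_stats(text):
    """Return simple stylistic measures for a plain-text string."""
    # Naive sentence split after ., ! or ? followed by whitespace
    # (an assumption; real texts need a more careful splitter).
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    # Lowercased word tokens: letters, optionally joined by apostrophes.
    tokens = re.findall(r"[a-z]+(?:['’][a-z]+)*", text.lower())
    return {
        "avg_sentence_length": len(tokens) / len(sentences),   # words per sentence
        "avg_word_length": sum(len(t) for t in tokens) / len(tokens),
        "type_token_ratio": len(set(tokens)) / len(tokens),    # distinct / total words
    }

sample = "To be, or not to be. That is the question."
stats = basic_stats(sample)
print(stats)
```

Because the type-token ratio falls as texts get longer, compare it only across samples of similar length, for example equally sized extracts from the tragedies and the comedies.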

An example of an excellent and free program that is useful for analysing genre is the aforementioned AntConc, a tool that detects which words are used more often than others and allows the user to discover which words are likely to be used in the vicinity of a word that the analyst chooses. A particularly useful function is its ability to incorporate a reference corpus, which is a collection of texts, either downloaded online or created by the analyst, against which another text or a whole body of texts can be compared. In the case of Shakespeare, you could for example download his sonnets and all of his plays, use the latter as the reference corpus, and then discover which words are more typical of Shakespeare the poet than of Shakespeare the playwright. For a short guide on how to use AntConc and its reference corpus function, see Froehlich, "Corpus Analysis with Antconc".
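To see what a reference-corpus comparison involves, here is a small sketch of a keyword ranking based on the log-likelihood statistic, one of the measures commonly used for this purpose. It is an illustration under stated assumptions, not a description of AntConc's internals: the function names and the toy token lists are hypothetical, and real input would be the tokenised sonnets and plays.

```python
import math
from collections import Counter

def log_likelihood(a, b, c, d):
    """Log-likelihood (G2) for a word occurring a times in a target corpus
    of c tokens and b times in a reference corpus of d tokens."""
    e1 = c * (a + b) / (c + d)  # expected count in the target corpus
    e2 = d * (a + b) / (c + d)  # expected count in the reference corpus
    g2 = 0.0
    if a > 0:
        g2 += a * math.log(a / e1)
    if b > 0:
        g2 += b * math.log(b / e2)
    return 2 * g2

def keywords(target_tokens, reference_tokens, top=10):
    """Words that are relatively more frequent in the target corpus,
    ranked by log-likelihood."""
    tgt, ref = Counter(target_tokens), Counter(reference_tokens)
    c, d = len(target_tokens), len(reference_tokens)
    scored = [
        (word, log_likelihood(a, ref[word], c, d))
        for word, a in tgt.items()
        if a / c > ref[word] / d  # keep only words over-represented in the target
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top]

# Toy data standing in for the sonnets (target) and the plays (reference).
target = "love love love love sweet time".split()
reference = "king love sword king time crown war war".split()
result = keywords(target, reference)
print(result)
```

With real corpora, very frequent function words often dominate such lists, so it can help to inspect the top fifty or so keywords rather than only the first few.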

Advanced

Machine learning models can be trained to classify texts into different genres. This can be useful with large corpora where hand-labelling the genre of every single text would take too long. Furthermore, examining the features that successfully distinguish literary genres can tell researchers more about the typical characteristics of each genre.

Supervised learning algorithms are trained on input data whose classification is known in advance. Here, the outcome class is the genre of the book, predicted from features such as title, vocabulary richness, length, or simply text extracts from the book that are fed to the classification model. The performance of such a model can be measured against a set of benchmark classifications, making this a “right/wrong” approach that is useful when the genres are known in advance. To understand how supervised classification works, try to predict book genres based on Goodreads descriptions. You can find a useful guide here and use this corresponding GitHub Repository containing the necessary code for conducting the analysis. You can also replicate the study by Worsham & Kalita (2018) to explore how different model designs perform.
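The supervised workflow (train on labelled texts, then score predictions against known genres) can be sketched with a very small multinomial naive Bayes classifier. This is a deliberately simple stand-in chosen so that it runs with the Python standard library alone; it is not the neural approach used in the linked guide or in Worsham & Kalita (2018). The four training descriptions and two held-out descriptions are invented for illustration.

```python
import math
from collections import Counter, defaultdict

def tokenize(text):
    return text.lower().split()

def train(labelled_texts):
    """labelled_texts: list of (text, genre) pairs with known genres."""
    word_counts = defaultdict(Counter)  # genre -> word frequencies
    doc_counts = Counter()              # genre -> number of training texts
    vocab = set()
    for text, genre in labelled_texts:
        tokens = tokenize(text)
        word_counts[genre].update(tokens)
        doc_counts[genre] += 1
        vocab.update(tokens)
    return word_counts, doc_counts, vocab

def predict(model, text):
    """Return the genre with the highest log-probability for the text."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    best_genre, best_score = None, -math.inf
    for genre in doc_counts:
        score = math.log(doc_counts[genre] / total_docs)  # prior
        total = sum(word_counts[genre].values())
        for tok in tokenize(text):
            if tok in vocab:  # words never seen in training are ignored
                # Add-one smoothing avoids zero probabilities.
                score += math.log((word_counts[genre][tok] + 1) / (total + len(vocab)))
        if score > best_score:
            best_genre, best_score = genre, score
    return best_genre

# Hypothetical toy data in place of real Goodreads descriptions.
train_data = [
    ("a detective investigates a murder in london", "crime"),
    ("the inspector finds a clue to the murder", "crime"),
    ("a young woman falls in love with a duke", "romance"),
    ("their love survives a scandal and a wedding", "romance"),
]
held_out = [
    ("the detective finds a clue", "crime"),
    ("love and a wedding", "romance"),
]

model = train(train_data)
accuracy = sum(predict(model, t) == g for t, g in held_out) / len(held_out)
print(accuracy)
```

The held-out texts play the role of the benchmark classifications: the model never sees their labels during training, so the share of correct predictions gives an honest, if here trivially small, estimate of performance.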

Unsupervised learning is an open-ended, exploratory approach that does not assume any “correct” classification output. The algorithms are designed to go through the input and find similarities in it. When going through the documents in the dataset, the model uses a distance metric to detect which documents share similar features and clusters those together. The desired number of clusters can be defined in advance, e.g. in k-means clustering (see e.g. this GitHub repository for k-means clustering of anthems). The unsupervised approach can reveal patterns and classes that were not defined beforehand. Try applying the method to the same dataset you used for supervised learning, excluding the class labels.
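The clustering idea can be illustrated with a bare-bones k-means over word-count vectors. This is a sketch under simplifying assumptions, not the code from the linked repository: it uses squared Euclidean distance, a deterministic farthest-point initialisation instead of the usual random start, and four invented mini-documents without labels.

```python
from collections import Counter

def vectorize(texts):
    """Turn each text into a vector of word counts over a shared vocabulary."""
    vocab = sorted({w for t in texts for w in t.lower().split()})
    vectors = []
    for t in texts:
        counts = Counter(t.lower().split())
        vectors.append([counts[w] for w in vocab])
    return vectors

def sq_dist(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def kmeans(vectors, k, iterations=20):
    # Deterministic start: the first document, then repeatedly the document
    # farthest from the centroids chosen so far (an illustrative choice).
    centroids = [vectors[0]]
    while len(centroids) < k:
        centroids.append(
            max(vectors, key=lambda v: min(sq_dist(v, c) for c in centroids))
        )
    labels = []
    for _ in range(iterations):
        # Assignment step: each document joins its nearest centroid.
        labels = [
            min(range(k), key=lambda i: sq_dist(v, centroids[i])) for v in vectors
        ]
        # Update step: each centroid becomes the mean of its members.
        for i in range(k):
            members = [v for v, lab in zip(vectors, labels) if lab == i]
            if members:
                centroids[i] = [sum(col) / len(members) for col in zip(*members)]
    return labels

docs = [
    "murder detective clue murder",
    "love wedding duke love",
    "detective clue inspector murder",
    "love duke scandal wedding",
]
labels = kmeans(vectorize(docs), k=2)
print(labels)
```

The cluster numbers themselves carry no meaning; what matters is which documents end up together. On real corpora, raw counts are usually replaced by relative frequencies or tf-idf weights so that long texts do not dominate the distances.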

However, a digital humanities analysis should go deeper and ask why the genre clusters emerge. What kind of words or other linguistic features separate the genres? If you have genre information available for your corpus, you can transform the corpus into a document-term matrix and explore the terms (i.e., words) that separate the clusters, following the guidelines by Karsdorp, Kestemont, and Riddell.
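As a rough illustration of this last step, the sketch below builds a document-term matrix and ranks terms by how much more frequent they are, on average, in one genre than in the rest. The ranking rule (difference of mean relative frequencies) is a simple assumption chosen for readability and is not necessarily the procedure that Karsdorp, Kestemont, and Riddell describe; the documents and genre labels are invented.

```python
from collections import Counter

def document_term_matrix(texts):
    """Rows are documents, columns are vocabulary terms, cells are counts."""
    vocab = sorted({w for t in texts for w in t.lower().split()})
    rows = []
    for t in texts:
        counts = Counter(t.lower().split())
        rows.append([counts[w] for w in vocab])
    return vocab, rows

def distinctive_terms(vocab, rows, labels, group, top=3):
    """Rank terms by mean relative frequency inside `group`
    minus mean relative frequency in all other documents."""
    def mean_rel_freq(selected):
        rel = [[c / sum(r) for c in r] for r in selected]  # normalise by length
        return [sum(col) / len(rel) for col in zip(*rel)]
    inside = mean_rel_freq([r for r, lab in zip(rows, labels) if lab == group])
    outside = mean_rel_freq([r for r, lab in zip(rows, labels) if lab != group])
    ranked = sorted(
        zip(vocab, inside, outside), key=lambda x: x[1] - x[2], reverse=True
    )
    return [word for word, _, _ in ranked[:top]]

docs = [
    "murder detective clue murder",
    "love wedding duke love",
    "detective clue inspector murder",
    "love duke scandal wedding",
]
genres = ["crime", "romance", "crime", "romance"]

vocab, rows = document_term_matrix(docs)
crime_terms = distinctive_terms(vocab, rows, genres, "crime")
romance_terms = distinctive_terms(vocab, rows, genres, "romance")
print(crime_terms, romance_terms)
```

The same function works with cluster numbers from an unsupervised model in place of genre names, which is one way to inspect what a cluster has actually picked up on.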

Resources

Scripts and sites

- AntConc, a free corpus analysis program for concordancing and text analysis.

- Voyant Tools, an analysis and visualisation environment for digital texts.

- A GitHub Repository for deep learning tools used in Worsham & Kalita (2018).

- A tutorial on building neural network machine learning models for genre classification, and the code on GitHub.

- Unsupervised classification of national anthems.

- Exploring Texts using the Vector-Space Model, Karsdorp, Kestemont, and Riddell (2022).

Articles

- Kim, E., Padó, S., & Klinger, R. (2017). Prototypical Emotion Developments in Literary Genres. In Proceedings of the Joint SIGHUM Workshop on Computational Linguistics for Cultural Heritage, Social Sciences, Humanities and Literature. (pp. 17-26). http://www.romanklinger.de/publications/kim2017.pdf

- Underwood, T., Bamman, D., & Lee, S. (2018). The Transformation of Gender in English-Language Fiction. Journal of Cultural Analytics. https://doi.org/10.22148/16.019

- van Cranenburgh, A. (2018, August). Cliché Expressions in Literary and Genre Novels. In Proceedings of the Second Joint SIGHUM Workshop on Computational Linguistics for Cultural Heritage, Social Sciences, Humanities and Literature. (pp. 34-43). https://www.aclweb.org/anthology/W18-4504.pdf

-

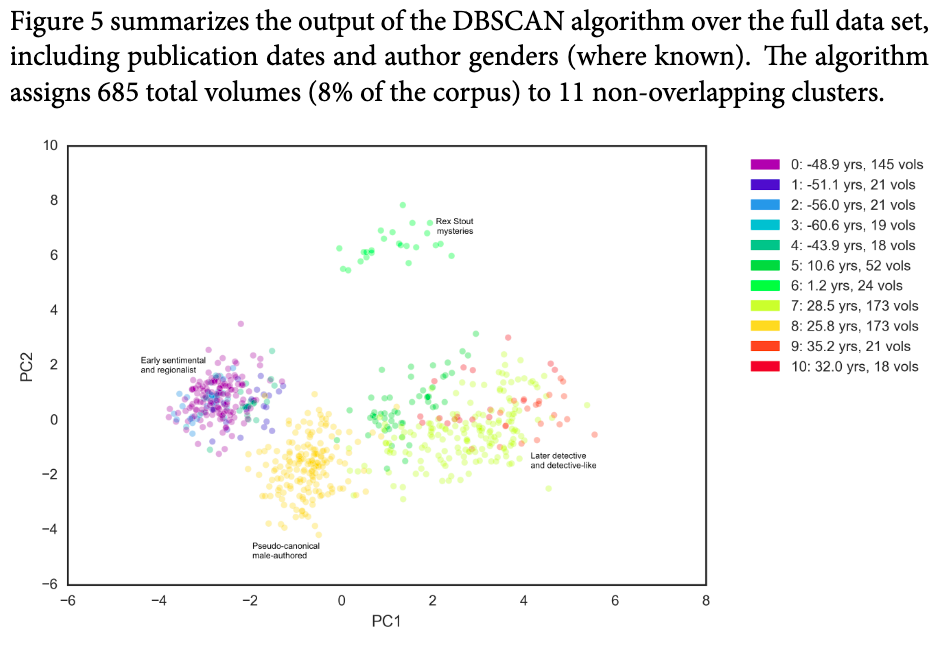

Wilkens, M. (2016). Genre, Computation, and the varieties of Twentieth-Century U.S. Fiction. Journal of Cultural Analytics. https://doi.org/10.22148/16.009

- Worsham, J., & Kalita, J. (2018, August). Genre identification and the compositional effect of genre in literature. In Proceedings of the 27th International Conference on Computational Linguistics. (pp. 1963-1973). https://www.aclweb.org/anthology/C18-1167/