Translation

Introduction

With the growing influence of machine learning and artificial intelligence, there is reason to take some pride in how difficult it would be for a machine to perform better at various tasks than a human being.

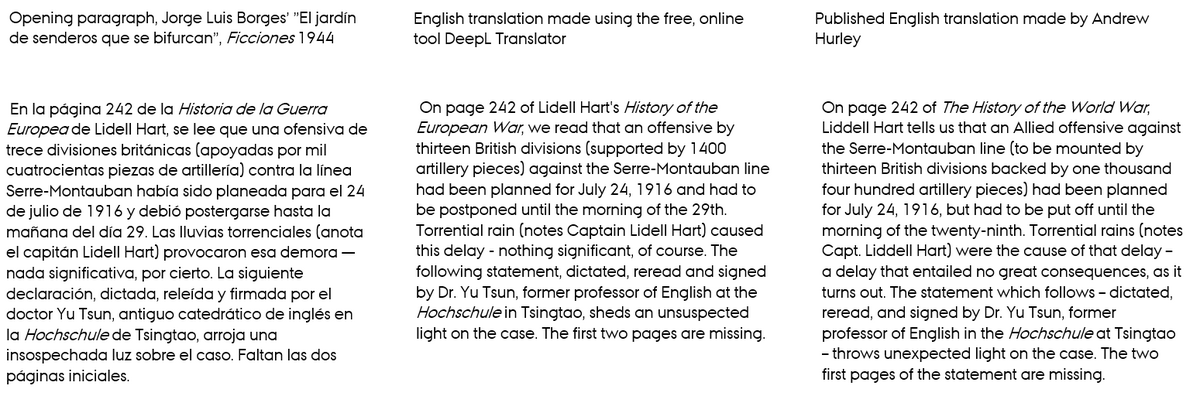

Chess is long gone (although humans still compete among themselves) but many other things are very hard to model. Translations may be one of the first places in literary cultures where computers have a real effect. The use of automated translations is a factor in modern translation practice, at least for some translators, more often of non-fiction, but just as a dictionary is a tool that can be used with care, so is automated translation capable of making the work of the translator better.

The quality of automated translation has been improved dramatically in the past years with the shift to neural networks rather than databases as the underlying technology for carrying out the task of the translator. Readable texts can thus be produced in seconds, although they are often fraught with clumsy mistakes. But the Turing test, a method for testing if a machine can think, for automated translation is getting closer to being passed regularly. There is an immediate use of this, namely that the access to minor literature ‒ or even not so minor ‒ will change, and for the purpose of understanding themes and general composition, there can be machine made translations that are useful.

Then there is the automated translation as an input to a discussion of literary quality: how do we determine whether an automated translation is better or worse than one made by humans? What are the criteria? How do we argue as close readers that one is better than the other? This is an instructive challenge to a discipline whose values are often tacit.

Finally, translation is a field where human-machine cooperation may work at its best, as a human translator can easily spot the mistakes made by the machine, but also learn from some of the decisions and probably improve the desired consistency of the work.

Applications

Elementary

You can make your own translations of literary works and compare extracts of your own translations with computer-generated translations made by e.g. Google Translate or DeepL. There exist various digital tools, such as this list of tools for quality and productivity, that might be helpful in the translation process. Try for instance to have your friends, family, or fellow students read both the human made and the computer generated translation and see if they can tell them apart. It might also be interesting to investigate in what aspects the automated translation differs from the human made.

Evaluating the quality of translations in an automated way can be difficult, but using a free grammar checker software such as Grammarly, Ginger, or Hemingway, is one possible starting point to get an overview of divergences as well as the type of errors frequently appearing in the computer generated translation. Hemingway is not only a grammar checker but also a style checker providing an overview of the complexity of an author’s writing style as well as the overall readability of a text, which could also be taken into account when comparing the translations made by a human and a computer, respectively. However, these approaches are still crude and you should also rely on your own knowledge and skills when evaluating translations.

Advanced

Translation and the assessment of its quality is a complex task, involving several linguistic and extra-linguistic factors. In addition to grammar and syntax to make comprehensible sentences, abstract dimensions such as differing metaphors, connotations, and synonyms between languages need to be considered when translating literature. The first machine translation (MT) approaches involved statistical methods, where translations were based on statistical co-occurrences of words in a bilingual corpus and rule-based approaches, using dictionaries and grammars providing linguistic information about source and target languages for the model. The recent advances in neural machine translation using artificial neural networks have now outperformed these approaches, and the field is developing rapidly (Stahlberg, 2020).

It is possible to train your own machine translation model if you want to deepen your understanding in machine translation (see tutorial links under the scripts section). The next step is to analyse the translation quality, for instance with a BLEU score between 0 and 1. Machine translation quality of different machine translations can for instance be compared with MT-ComparEval. It is possible to clone their GitHub repository, and use the tool to compare the translations of MT models with different training and architecture.

Resources

Scripts and sites

-

An example of Neural Machine Translation (NMT) on GitHub.

-

Sequence-to-sequence model implementation with TensorFlow on GitHub.

-

A GitHub Repository for training and comparing different neural network models on English-French translations.

- MT-ComparEval, an open-source tool for comparison and evaluation of machine translations.

Articles

-

Beens, P. (2018, February 27) Longread: Alice in Machine Translation Land. Vertaalt. https://www.vertaalt.nu/blog/longread-alice-in-machine-translation-land/

-

Castilho, S., Doherty, S., Gaspari, F., & Moorkens, J. (2018). Approaches to human and machine translation quality assessment. In Translation Quality Assessment (pp. 9-38). Springer, Cham. http://dx.doi.org/10.1007/978-3-319-91241-7_2

-

Isabelle, P., & Kuhn, R. (2018). A Challenge Set for French--> English Machine Translation. arXiv preprint arXiv:1806.02725. https://arxiv.org/abs/1806.02725v2

-

Stahlberg, F. (2020). Neural machine translation: A review. Journal of Artificial Intelligence Research, 69, 343-418. http://dx.doi.org/10.1613/jair.1.12007

- Sudarikov, R., Popel, M., Bojar, O., Burchardt, A., & Klejch, O. (2016). Using MT-ComparEval. Translation Evaluation: From Fragmented Tools and Data Sets to an Integrated Ecosystem, 76-82. http://www.cracking-the-language-barrier.eu/wp-content/uploads/Sudarikov-etal.pdf